Our Product Portfolio

All-in-One Turnkey Solutions

Pi-search

Smart Enterprise Search Engine

Our multi-format indexing technology processes all document types to build a unified knowledge repository. Data security is guaranteed through automated purging protocols, complemented by manual export options. Integrated analytics provide detailed usage metrics for ongoing performance optimization.

Secure Agent

The customizable AI assistant

An easy-to-use interface for quick setup, with no technical background needed. Comprehensive access management and SSO, with built-in integration into your existing workflows.

Custom Models

Expertise in specialized models

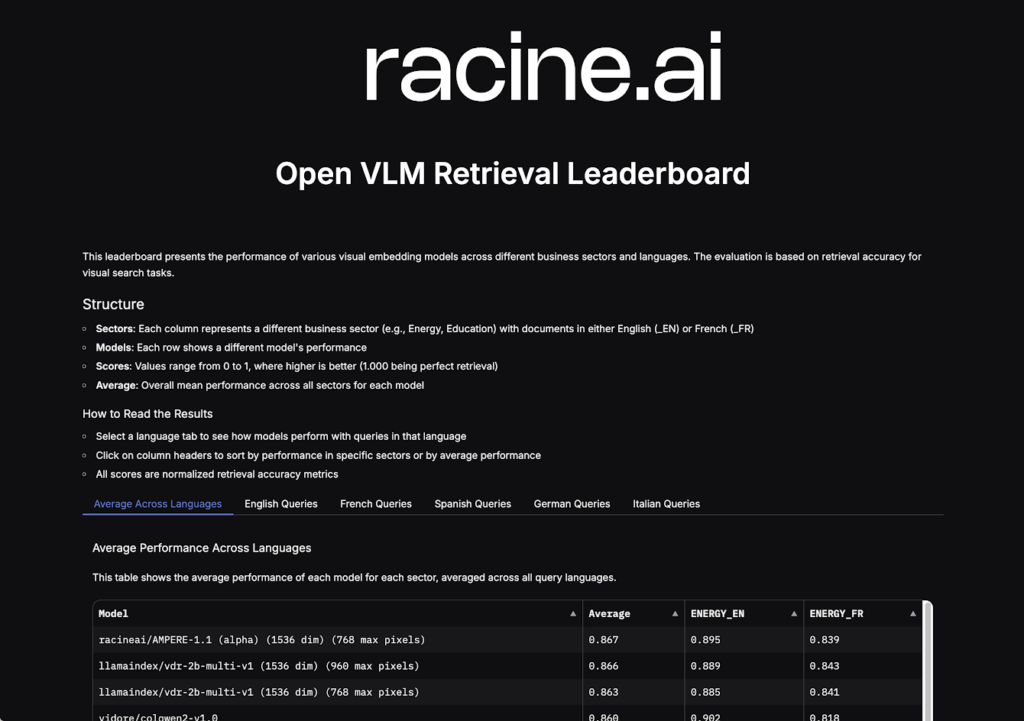

Our expertise in developing custom model architectures enables us to adapt our solutions to each sector. Our flagship model “Le Triomphant” topped the Hugging Face leaderboard among open-source large language models in May 2024.

These empirical evaluations enable us to continuously refine our architectures and optimize performance according to industry-specific requirements.

| Metric | Value |
| --- | --- |
| Deployment time | 48 hours |
| Cost reduction* | 50% per user |
| Published models | 30+ on Hugging Face |
| Download volume | 200,000+ |

*By optimizing the use of LLMs based on use case typology, compared to standard market subscriptions (€20-30/month).

FAQ

What is Pi-search?

Pi-search is a next-generation internal search engine combining three essential elements: intelligent indexing adapted to different types of business documents, an advanced semantic search system, and sophisticated knowledge base management. The objective is to optimize access to information while making efficient use of business knowledge.

How can Pi-search solve information search problems in my company?

Imagine being able to instantly find any information across all your company documents, even if you don’t know the exact words to search for. Pi-search goes beyond simple keyword search: it understands context, analyzes images and graphics, and can even make connections between different documents. It’s like having an expert who knows all your documents and can instantly find the relevant information.

Why do I need a Secure Agent in my company?

The Secure Agent is designed for companies that want to benefit from conversational AI without compromising their security. It combines the advanced capabilities of LLMs with strict data control. For example, if an employee asks a question involving sensitive data, the agent will automatically use an on-premise model rather than a cloud model, while ensuring a smooth user experience.
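To make the idea concrete, here is a minimal sketch of sensitivity-based model selection. It is an illustration only, not the Secure Agent's actual implementation: the keyword patterns, the function names `is_sensitive` and `choose_backend`, and the backend labels `"on_premise"` and `"cloud"` are all assumptions made for the example.

```python
import re

# Hypothetical patterns marking a question as sensitive. A real deployment
# would rely on its own classification policy rather than a keyword list.
SENSITIVE_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),  # an ID-number-like pattern
    re.compile(r"\b(salary|payroll|contract|confidential)\b", re.IGNORECASE),
]


def is_sensitive(question: str) -> bool:
    """Return True if any hypothetical sensitivity pattern matches."""
    return any(pattern.search(question) for pattern in SENSITIVE_PATTERNS)


def choose_backend(question: str) -> str:
    """Send sensitive questions to an on-premise model, others to the cloud."""
    return "on_premise" if is_sensitive(question) else "cloud"
```

In this sketch the employee never chooses a backend: the same question box is used in both cases, and only the destination model changes.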

What is a router?

A router is the component that decides which model handles each request. One of RACINE.AI’s distinctive features lies in its “smart routing” approach: rather than using a single model for all tasks, RACINE.AI automatically selects the most appropriate model based on the complexity of the request. This allows for cost optimization (using lighter models for simple tasks) while ensuring performance (mobilizing more powerful models when necessary).
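As a rough illustration of complexity-based routing, the sketch below scores a request and picks the cheapest model tier able to handle it. Everything in it is assumed for the example: the tier names, the thresholds, and the toy scoring heuristic are hypothetical and do not describe how RACINE.AI actually measures complexity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str
    max_complexity: float  # highest complexity score this tier accepts


# Hypothetical tiers, ordered from cheapest to most capable.
TIERS = [
    ModelTier("light", 0.3),
    ModelTier("standard", 0.7),
    ModelTier("large", 1.0),
]

# Hypothetical keywords suggesting the request needs more reasoning.
REASONING_HINTS = ("why", "compare", "explain", "analyze", "step by step")


def complexity_score(request: str) -> float:
    """Toy heuristic in [0, 1]: length and reasoning keywords raise the score."""
    text = request.lower()
    length_part = min(len(text.split()) / 100, 0.5)
    hint_part = 0.5 if any(hint in text for hint in REASONING_HINTS) else 0.0
    return length_part + hint_part


def route(request: str) -> str:
    """Return the name of the cheapest tier whose threshold covers the score."""
    score = complexity_score(request)
    for tier in TIERS:
        if score <= tier.max_complexity:
            return tier.name
    return TIERS[-1].name
```

Ordering the tiers from cheapest to most capable means the first match is always the least expensive adequate model, which is where the cost saving comes from.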

How can specialized models bring value to my industry sector?

Generalist models are like Swiss Army knives: they do everything, but not always optimally. Our specialized models are trained for your industry sector, with its own vocabulary, standards, and particularities. For example, a model specialized in geotechnics will naturally understand technical terms that a generalist model might misinterpret.

What is the difference between a classic conversational agent and your Secure Agent?

A classic conversational agent generally uses a single model and handles all requests in the same way. Our Secure Agent dynamically adapts its behavior: it can use different models depending on data sensitivity, finely manage authorizations, and ensure traceability of exchanges. It’s like having an assistant that automatically adapts to the required level of confidentiality.

How do I start implementing these solutions in my company?

Implementation follows a progressive approach: we start by identifying your specific needs, then we deploy solutions in a modular way. For example, you can start with Pi-search to organize your knowledge, then gradually add the Secure Agent to automate certain tasks.